The system gives audible information about common obstacles such as signs, tree branches and pedestrians as the user moves around.

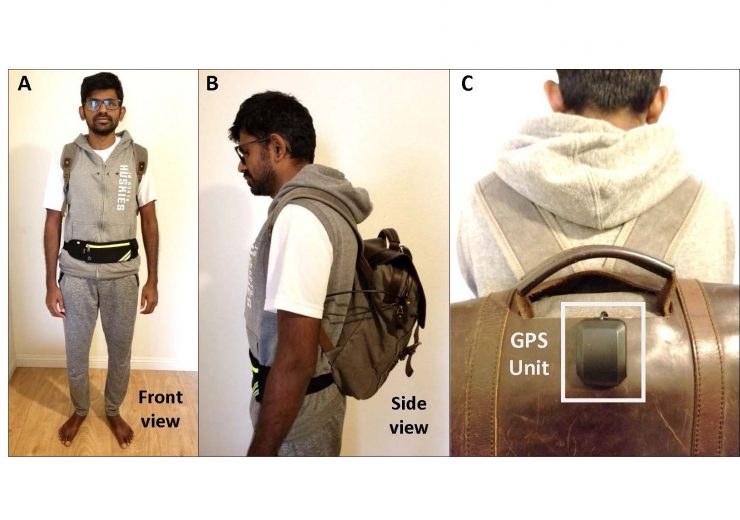

AI-powered, voice-activated backpack for the visually impaired. (Credit: Business Wire.)

University of Georgia researcher Jagadish Mahendran and his team have developed an artificial intelligence (AI)-powered, voice-activated backpack to help visually impaired people navigate.

The backpack is designed to detect everyday hazards such as traffic signs, hanging obstacles, crosswalks, moving objects and changes in elevation, all through a low-power, interactive device.

The navigation system has three parts: a host computing unit carried in a small backpack, a vest that conceals a camera, and a fanny pack holding a pocket-sized battery pack that provides around eight hours of use.

University of Georgia Institute for Artificial Intelligence engineer Jagadish Mahendran said: “Last year when I met up with a visually impaired friend, I was struck by the irony that while I have been teaching robots to see, there are many people who cannot see and need help.

“This motivated me to build the visual assistance system with OpenCV’s Artificial Intelligence Kit with Depth (OAK-D), powered by Intel.”

The navigation system uses a Luxonis OAK-D spatial AI camera, which can be attached to either the vest or the fanny pack and is connected to the computing unit in the backpack.

The OAK-D unit is a powerful AI device that runs on an Intel Movidius VPU and uses the Intel Distribution of OpenVINO toolkit for on-chip edge AI inferencing.

The unit can run advanced neural networks and computer vision functions while producing a real-time depth map from its stereo camera pair, along with colour information from a single 4K camera.

Bluetooth-enabled earphones let the user interact with the system through voice queries and commands, and the system responds with spoken information.

The navigation system audibly conveys information about common obstacles including signs, tree branches and pedestrians, as the user moves around.

It also warns of upcoming crosswalks, curbs, staircases and entryways.

Luxonis founder and chief executive officer Brandon Gilles said: “Our mission at Luxonis is to enable engineers to build things that matter while helping them to quickly harness the power of Intel AI technology.

“So, it is incredibly satisfying to see something as valuable and remarkable as the AI-powered backpack built using OAK-D in such a short period of time.”

Intel technology advocacy and AI4Good director Hema Chamraj said: “It’s incredible to see a developer take Intel’s AI technology for the edge and quickly build a solution to make their friend’s life easier. The technology exists; we are only limited by the imagination of the developer community.”